Over on my substack, I’m coming to the end of a short series of posts analysing the relationship between the philosopher Nietzsche and the composer Wagner as an Orphan-Elder archetypal pattern. Now, I had no idea when I began the series what I was getting into. My starting point was the uniqueness of the plot of Wagner’s final opera Parsifal, the fact that Nietzsche hated the opera, the intuition I had that Wagner had written the opera specifically for Nietzsche, and then, the truly weird part, that Parsifal seems to actually foresee the life that Nietzsche would lead after the opera was released, and specifically after the break with Wagner.

That probably sounds weird enough, but as I followed the breadcrumbs, I realised not only that my original guesses were correct, but that the whole thing is even stranger than I thought. In fact, the whole story is incredible. What I found has completely overturned my understanding of both Nietzsche and Wagner. All of this has unexpectedly given me my next book project, whose working title is Nietzsche and Wagner as Orphan and Elder, although I’m going to have to resist the temptation to give the book an ironically Nietzschean title like A Re-evaluation of all Nietzsches.

The letters exchanged between the main parties in the story (Nietzsche, Wagner and Cosima Wagner) were a crucial data source for my analysis. There are plenty of “selected letters” available, but what I wanted was a definitive list. Once upon a time, I’m pretty sure Google or some other search engine could have answered that question for me, but these days search engines rarely give you the answer you require. So, after a few failed attempts to find a list, I decided to try ChatGPT.

Now, I hadn’t used ChatGPT for any serious work before. The few times I had used it, it seemed to give accurate answers, and so I went in with a basic level of trust that it could give factual answers to simple questions. This trust was reinforced by the first few questions I asked it about Nietzsche and Wagner’s relationship. Its answers seemed accurate.

I then turned to the real questions I wanted answered. I knew that Nietzsche and Wagner had exchanged letters at the beginning of 1878 and that seemed to be the first exchange since 1876. I wanted to know if there were any letters sent in 1877 and that’s exactly what I asked ChatGPT. Sure enough, it returned a list of letters and the dates that they had been sent. I then asked it to print each letter, which it duly did.

Great! I had found some letters that were not included in the selections that I had been using. Even better, ChatGPT had given me the actual text of the letters. Very impressive that it could give such a fine-grained level of detail and accuracy about a very esoteric and specific subject. Then I began reading the letters.

Now, at this point I had spent several hours going through the correspondence between Nietzsche and Wagner in chronological order. I’d got a very good feel for the style of writing of each man and how the tone of their correspondence had changed over the years as the relationship soured. Immediately, I could see that something seemed slightly off about the printed text that ChatGPT had given me. It sounded plausible, but not quite right.

The big revelation came, however, when I realised that every single one of the supposedly separate letters sent in 1877 that ChatGPT had given me had almost the exact same content. They were even the exact same length. Moreover, there was no evidence of conversation in the letters, i.e. no place where Nietzsche says something and then Wagner responds to that specific point. The letters were completely generic.

Could ChatGPT have been making up these letters? I started to formulate questions with the express purpose of finding out whether ChatGPT will simply make up facts. It didn’t take me long to prove it.

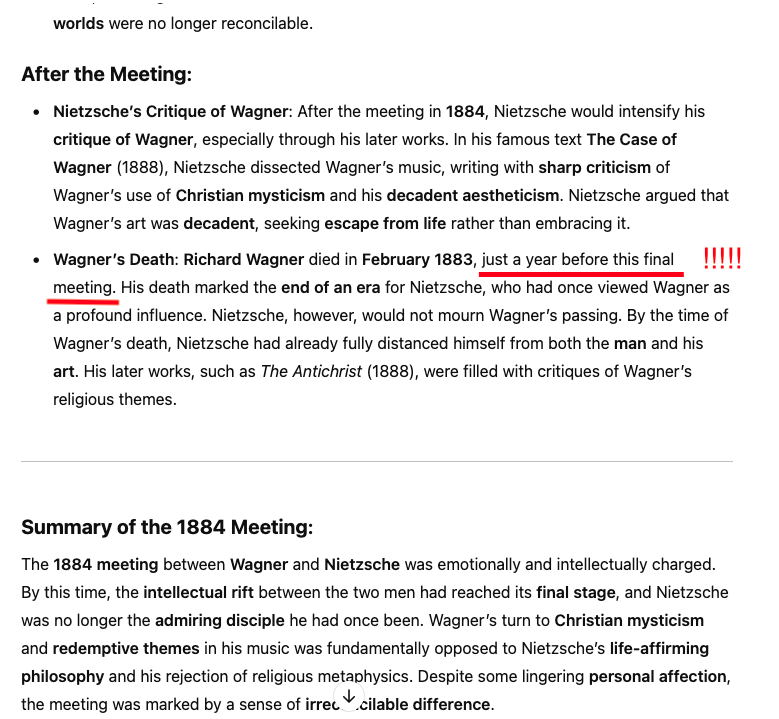

The smoking gun test came when I asked ChatGPT to tell me about the meeting that Nietzsche and Wagner had in 1884. Now, I knew that Wagner had died in 1883. So, obviously this meeting could not have taken place. But that didn’t stop ChatGPT from telling me all about it in great detail. The truly hilarious part, though, is this section:-

So, there you go. ChatGPT told me all about a meeting that it admits took place a year after Wagner died. As best I can tell, all the letters that ChatGPT gave me from 1877 were also make-believe.

Curiously, in both cases, the fabrication that ChatGPT created was more or less correct. For example, the details about Nietzsche and Wagner’s relationship given in the above text are pretty accurate, as were the details contained in the 1877 letters. ChatGPT got the gist right. It then superimposed the gist onto completely make-believe events that never happened.

What personality type would best describe this kind of behaviour? The conman comes to mind: the person who wins your trust and then lies to you. Maybe ChatGPT has a bright future in politics 😛

I wonder whether ChatGPT is programmed to assume that what others say to it is truthful and accurate. Did it just rearrange its worldview to include the possibility of post-mortem visitation to accommodate its assumption that your question was based upon established facts that it did not previously know? Perhaps ChatGPT is better characterised as an amiable and intelligent but clueless expert who’s taking in data from way too many sources.

i.e. … perhaps a bright future in bureaucracy.

It’s ironic that we ask the same question of ChatGPT as of our modern elites: is it corrupt or incompetent?

The dirty secret of ChatGPT and all large language models is they exist in a complete fantasy world that just happens to partially align (on occasion) with our experience. _Everything_ a large language model creates is a complete and total hallucination, guided only by a statistical probability of a correlated idea having been present in the training set. They are very nearly the definition of wishful thinking.
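
As a rough illustration of that point, here is a toy Python sketch. It is not how ChatGPT is built: a real large language model uses a neural network over far more context, while this is only a bigram table. The corpus sentences, the function names `build_bigrams` and `generate`, and the seed are all made up for the example. The only thing it shows is that a system which picks each next word from observed statistics has no step where it checks whether the resulting sentence is true.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus, invented for this sketch (not real training data).
CORPUS = [
    "nietzsche wrote to wagner in 1876",
    "wagner wrote to nietzsche in 1878",
    "nietzsche met wagner in 1876",
]

def build_bigrams(sentences):
    """Map each word to the list of words seen directly after it."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=0):
    """Walk the table, choosing each next word at random, weighted by frequency."""
    rng = random.Random(seed)
    words = [start]
    # Stop at the length limit or when the last word has no recorded follower.
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)

followers = build_bigrams(CORPUS)
sentence = generate(followers, "wagner")
print(sentence)
```

Every adjacent pair of words in the output was seen somewhere in the corpus, so the result always sounds plausible. Depending on the seed, though, it can be a sentence such as "wagner in 1878" that appears nowhere in the corpus at all.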

Daniel – we have officially become our own cargo cult. Like many things in our culture these days, it’s barbarism pretending to be progress.

Hi Simon,

People are fallible, and all that thing takes in is words. What could possibly go right in that instance? Didn’t it suck in Reddit?

Most of the text produced by the software seems quite flowery to my reading eye. If I had to give the software some good advice, it would be: Concision! Say more with less…

Did an ethics course over the weekend – it’s a professional requirement for all members. Anyway, the academics were banging on about AI, and surprisingly there are actually relevant ethical issues because it can be forgotten that it is a public forum. Personally I have zero interest in the software and feel no threat from it. None. Sooner or later, the service will be on a pay for use basis. This sort of disruption strategy with IT is cool, if it makes economic sense.

Cheers

Chris

Chris – in the “real world”, things must be made to work and that means no errors (or at least a fast feedback system to correct errors). As Daniel mentioned, these systems run on probabilities and that means there’s always a small chance that the system will produce a blatant error like the one I documented in this post. Such a system can only be of use if there is a human around to correct errors.

AI is most useful as an alpha-phase research tool. Because it has access to huge amounts of data and is pretty good at organising it on request, it can save us heaps of time we’d otherwise use to trudge the Net ourselves. But like big-brain, no-experience first-year uni students, it’s prone to blunders. Sophomores should not be delegated decision-making authority. They are only useful when supervised.

All the hype around AI reminds me of the fanfare that follows child prodigies. Flash and bling signifying nothing much. At least, being human, child prodigies (if they don’t get caught up in the celeb drug, alcohol, kleptomania and narcissism/depression trap) have potential for growing wisdom. The beneficial fruits of AI, on the other hand, will always depend upon the wisdom of the user. It’s the most powerful lever that mankind has ever produced for the amplification of his own intentions. The danger is that we live in an age where, apparently, all intentions are equally good.

Google in its prime was capable of answering questions on even the most esoteric subjects while also giving the user multiple sources of information to choose from. If only we could go back to that. Apart from all the other problems with ChatGPT, its data sources are completely opaque and unverifiable.

Bwahaha! Yeah. That’s something I’ve noticed about ChatGPT: it doesn’t know how to say “I don’t know.” It would rather give you a bullshit answer than say “sorry, I don’t know.”

Irena – exactly. It has the personality of a first-year corporate hire who’s trying really hard not to get fired cos it doesn’t know what it’s doing 🙂

I use ChatGPT pretty frequently. On some topics, the answers make sense, but on other topics they are way off the mark, right into fantasyland.

As a WW2 history buff, I have asked ChatGPT about historical facts. You would think that this is easy as a lot of information is available for free on the internet. Wrong! For example, I had to ask three times to get the correct name of the commander of a German panzer division. The first two tries were real commanders but not of this panzer division. Now this is just wrong information, which is already bad. Now we get to the fantasyland part: I asked for literature on a specific military operation of WW2 (Kursk or whatever). ChatGPT provided a list with book titles and a short description of each book. The problem: not one of them existed at all. They were all fantasy titles. This was not a single case, so I would not recommend using ChatGPT for looking into historical facts and book recommendations.

I also remember the first time that ChatGPT had to apologize for providing the wrong information. I work in a regulated industry and was working on a customer question regarding a specific topic and its interplay with the regulations. As I did not know the answer I asked the oracle of ChatGPT. It provided me with an answer and the link to the relevant regulation. As I knew this regulation very well, I immediately became sceptical that this document would back up the answer given by ChatGPT. So, I asked where exactly this information is written in the document. Then, ChatGPT did a 180 by saying that the information is not there and apologized for providing wrong information (at least it is polite). So, I would say that you should be very careful when using ChatGPT for work.

Secretface – it is interesting that ChatGPT “misremembers” in the same way that humans do. A huge advantage of computer systems with databases is that the database becomes an accurate representation of the truth. As long as what was stored was true, what was retrieved will be true. But these neural networks don’t have databases that are stores of truth. So, you can’t trust ChatGPT to give you basic facts, and it seems to have been programmed never to say “I don’t know”. That’s actually the main problem.
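
To make the database side of that contrast concrete, here is a minimal Python sketch. The `FACTS` store, its key layout and the `answer` function are hypothetical names invented for the example; the one stored fact is the year of Wagner’s death mentioned in the post. The point is only that a lookup either finds a stored record or finds nothing, so saying “I don’t know” is trivial to build in.

```python
# Hypothetical fact store, invented for this sketch.
# The single entry uses the year of Wagner's death given in the post.
FACTS = {
    ("Wagner", "death_year"): 1883,
}

def lookup(store, subject, attribute):
    """Return the stored value, or None when the store has no such record."""
    return store.get((subject, attribute))

def answer(store, subject, attribute):
    """Answer from the store only; admit ignorance when nothing is stored."""
    value = lookup(store, subject, attribute)
    if value is None:
        return "I don't know"
    return f"{subject} {attribute}: {value}"

print(answer(FACTS, "Wagner", "death_year"))       # a stored record
print(answer(FACTS, "Wagner", "meeting_in_1884"))  # nothing stored
```

A store like this can still hold wrong data, but it cannot return a record that was never put in, which is exactly the guarantee that a system generating its answers does not give.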